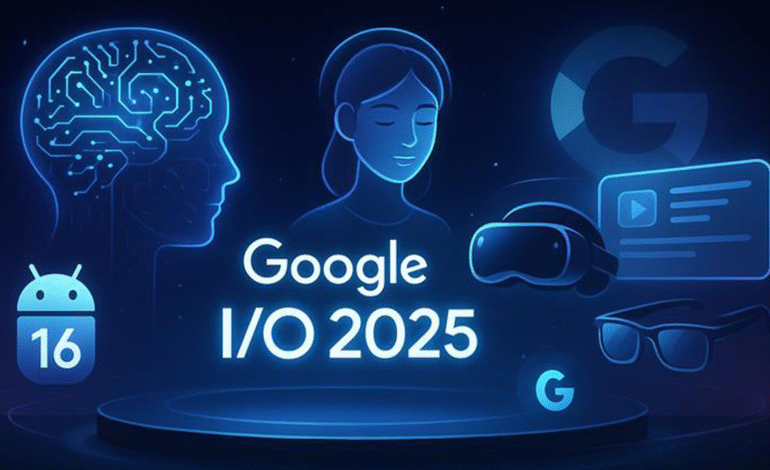

The AI Pivot: Inside Google’s Bold Bid to Redefine the Future of Technology

At Google I/O 2025, the company delivered more than a series of product updates. It unveiled a sweeping reorientation toward artificial intelligence, signaling a shift that could redefine the future of digital interaction. After facing mounting competition, especially from OpenAI and Microsoft, and increasing scrutiny over its search dominance, Google used its annual developer conference to present a cohesive and ambitious strategy. It is no longer simply responding to the AI wave; it is attempting to lead it.

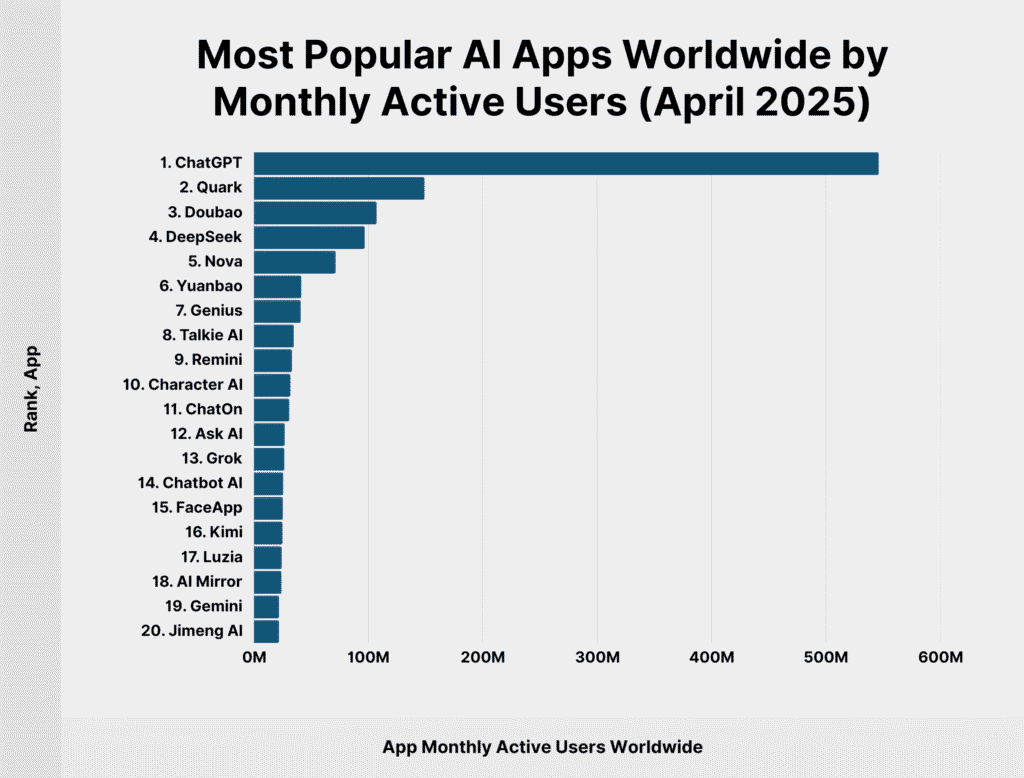

This pivot has been shaped in large part by the meteoric rise of generative AI tools like ChatGPT, which recently surpassed 100 million weekly active users. As more users flocked to conversational models to solve problems, learn, and work, the traditional search interface began to look outdated. The result was a perceptible erosion of Google’s central role as the gateway to online information. That loss of user intent and attention translated into a loss of strategic control, one that Google could not afford to ignore.

The company’s answer is to fundamentally reimagine Search itself. With the rollout of “AI Mode” to all users in the United States, Google is moving away from its decades-old paradigm of keyword queries and lists of links. Instead, users are invited to engage in contextual, natural language conversations with a model designed to understand intent, nuance, and the broader context of a request. According to CEO Sundar Pichai, the shift reflects the culmination of years of research now becoming reality. Rather than layering AI onto legacy systems, Google is rebuilding the experience from the ground up.

This transformation is powered by Gemini 2.5, the newest member of Google’s family of foundation models. Gemini is no longer just a language model. It is described as a world model, one capable of understanding cause and effect, interpreting physical environments, and handling real-time multimodal inputs across text, image, audio, and video. A newly introduced “Deep Think” mode allows Gemini to reason through complex problems and generate more considered responses, addressing a demand for models that go beyond quick or surface-level answers.

None of this would be possible without the computational horsepower to match. Google unveiled Ironwood, its seventh-generation Tensor Processing Unit. Each pod delivers 42.5 exaflops of performance, representing a tenfold increase over the previous generation. This leap enables Google to scale large model inference efficiently. Alongside it, Gemini 2.5 Flash offers comparable results while consuming 20 to 30 percent fewer tokens, lowering the cost of each response. In a field where inference expenses are quickly becoming a bottleneck, this focus on efficiency could prove decisive.
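
To see why a 20 to 30 percent reduction in tokens matters, a rough back-of-the-envelope sketch helps. The monthly workload and the per-million-token price below are hypothetical figures chosen only for illustration, not Google's published pricing, and the helper name `token_cost` is likewise an assumption.

```python
def token_cost(tokens: int, price_per_million: float) -> float:
    """Return the dollar cost of `tokens` at a given price per million tokens."""
    return tokens / 1_000_000 * price_per_million


# Hypothetical workload and price, assumed purely for illustration.
BASELINE_TOKENS = 1_000_000_000  # tokens processed per month
PRICE_PER_MILLION = 1.00         # dollars per million tokens

baseline = token_cost(BASELINE_TOKENS, PRICE_PER_MILLION)

# Compare the two ends of the 20 to 30 percent range cited in the article.
for reduction in (0.20, 0.30):
    reduced_tokens = round(BASELINE_TOKENS * (1 - reduction))
    cost = token_cost(reduced_tokens, PRICE_PER_MILLION)
    print(f"{reduction:.0%} fewer tokens: ${cost:,.2f} vs ${baseline:,.2f} baseline")
```

Because cost scales linearly with token count under this simple model, the saving tracks the token reduction directly. Real bills would also depend on the split between input and output tokens and on any tiered pricing.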

However, the company’s ambition goes beyond improving accuracy or performance. A central theme at I/O 2025 was agentic AI, which shifts the role of AI systems from passive assistants to proactive agents. Project Astra, a prototype assistant capable of combining language understanding with live visual context, exemplifies this evolution. So does Jules, an asynchronous AI coding agent designed to handle extended development workflows. These systems are not merely reactive. They anticipate needs, retain memory across sessions, and collaborate more like digital coworkers than simple tools.

This transition evokes the early days of the smartphone, when devices moved from being communication tools to fully integrated computing platforms. Agentic AI may similarly become the foundation of new digital workflows, both in consumer life and enterprise operations. To that end, Google is embedding Gemini into everyday environments. Whether through Android Auto, Wear OS, or Google TV, the idea is to make AI ambient and responsive, removing the need for traditional interfaces. A recent $150 million investment in Warby Parker signals further intent to expand into AI-native hardware, suggesting that Google sees tight physical integration as essential for seamless user experiences.

Perhaps the boldest move from Google this year is its foray into premium AI services. The launch of Google AI Ultra, priced at $249.99 per month, breaks from Google’s longstanding tradition of offering free or ad-supported products. It reflects a conviction that its models are now capable enough to support enterprise-grade pricing. This shift also hints at a larger monetization strategy, as Google begins to position AI not as a feature but as core business infrastructure.

These announcements cast a long shadow over the competition. Microsoft, with its Copilot suite integrated across Windows, Office, GitHub, and Azure, has been one of the most aggressive players in enterprise AI. While Google leads in infrastructure and consumer reach, Microsoft’s cohesive product story gives it an edge in user adoption across productivity workflows. Google must now prove it can deliver a similarly unified experience across its sprawling ecosystem.

Smaller but significant challengers are also gaining momentum. Anthropic’s Claude has built a strong reputation for consistent and grounded responses, especially among users prioritizing thoughtful dialogue. Perplexity AI offers a hybrid experience that combines conversational interaction with web search transparency, giving users confidence in its answers. Google’s introduction of Deep Think appears designed to address this new class of competitor, especially as users begin to demand slower, more reasoned outputs.

Meanwhile, niche players like Midjourney, Runway, and ElevenLabs continue to outperform Google in specialized areas such as image synthesis, video generation, and voice cloning. Google’s broad but general-purpose approach may struggle to match the depth and refinement of these focused platforms, which have cultivated loyal followings among creative professionals.

Yet Google’s most persistent weakness may be its own complexity. While Microsoft has unified Copilot across its ecosystem, Google’s services, from Gmail to Docs to Android, often feel siloed. The lack of a single AI interface across products is noticeable. Frequent changes to product branding and abrupt feature removals have also contributed to user hesitation. In the enterprise space, where stability and clarity are essential, this pattern can erode confidence.

On top of that, regulatory scrutiny is intensifying. As AI becomes more embedded in information access, governments around the world are beginning to examine the implications more closely. With its vast user base and data assets, Google is likely to face more pressure than smaller players. The company must also continue competing in the talent war, as open-source communities and mission-driven AI labs increasingly appeal to top researchers who are wary of commercial constraints.

Still, Google’s strategic advantages remain formidable. Its infrastructure scale, model diversity, and product reach give it tools that few rivals can match. Gemini now has over 400 million monthly users, and adoption of its most advanced models has increased by 45 percent in recent months. That momentum offers a solid foundation, provided the company can maintain execution.

The ripple effects of Google’s AI evolution are already being felt. Apple is expected to focus on on-device AI with an emphasis on privacy and speed, while Meta continues to invest in the metaverse, a vision that currently feels disconnected from market demand. Amazon’s Alexa, once a pioneer in voice-based AI, is now competing in a world where multimodal, real-time agents like Astra set a far higher standard.

For creative industries, Google’s tools like Veo 3 and Flow are democratizing media production. By enabling synchronized audiovisual generation and advanced editing through natural language prompts, they lower the barrier to cinematic quality content creation. This could reshape workflows in advertising, entertainment, and independent filmmaking.

In software development, Jules introduces a new kind of coding assistant. Unlike autocomplete tools like GitHub Copilot, Jules operates asynchronously, maintaining context across sessions and engaging in iterative workflows. For small teams or solo developers, this could accelerate release cycles and reduce costs, while elevating the role of AI from helper to collaborator.

The larger story coming out of Google I/O 2025 is that AI is no longer an experimental frontier. It is becoming the foundational infrastructure of computing. Google is embedding intelligence at every level, from chips to cloud to consumer interfaces. This is not just an evolution in functionality; it is a redefinition of what software and devices are for.

Whether this bold pivot succeeds will depend on execution, trust, and the ability to maintain coherence across a vast ecosystem. But one thing is clear. Google is no longer content to merely participate in the AI race. It wants to define the track, the rules, and the pace. The coming years will show whether the rest of the industry is prepared to follow.