Deepfake Dystopia

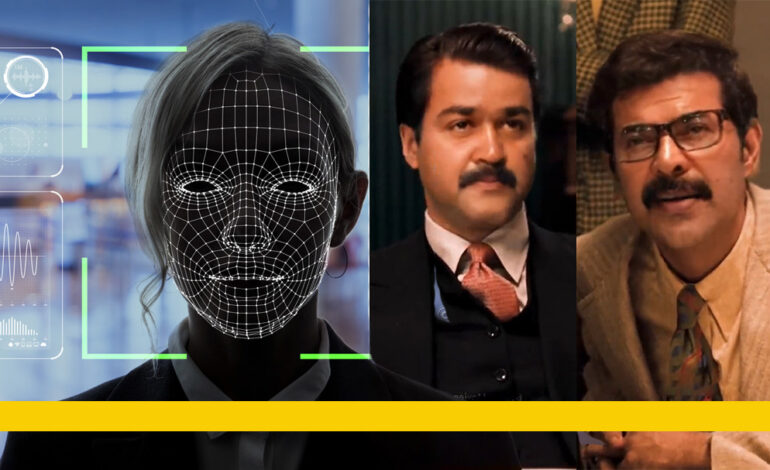

Recently, a clip based on a scene from the 1972 classic The Godfather went viral on Malayalam social media. What made this rendition a hit among Malayalis was that Michael Corleone (Al Pacino), Moe Greene (Alex Rocco), and Fredo Corleone (John Cazale) were replaced with Malayalam superstars Mohanlal, Mammootty, and Fahadh Faasil respectively using deepfake, a cutting-edge artificial intelligence technique. Even though the technique has been around for a while, this is perhaps the first time the common Malayali has taken note of this particular generative AI technology.

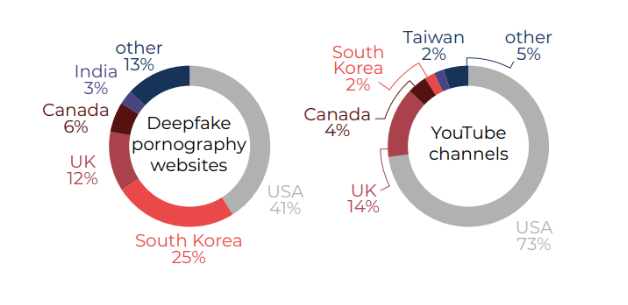

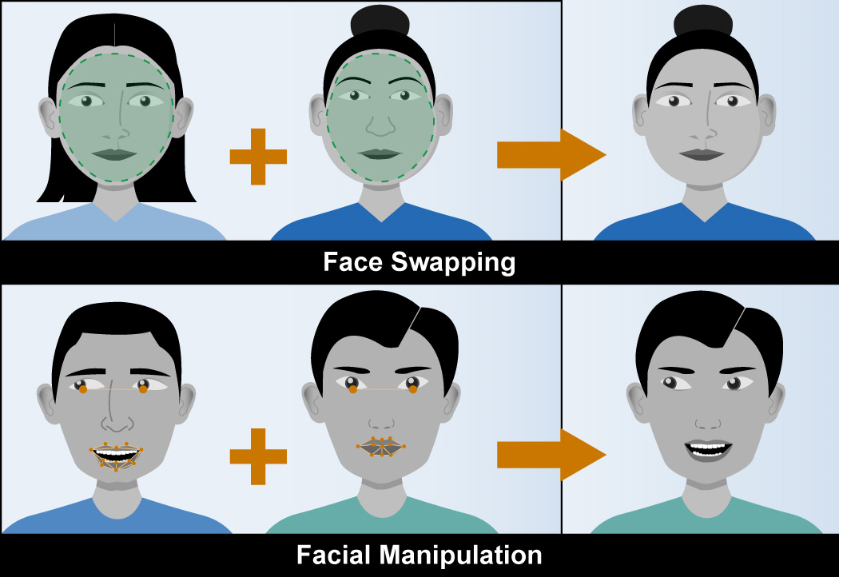

As everyone can see, it opens up a myriad of possibilities for the creative and entertainment industries. You can insert the images of popular figures into historical videos or make classic Hollywood actors speak Malayalam. Do you remember the famous scene from the Tamil movie Indian where Senapathi, the protagonist, receives a medal from Subhash Chandra Bose? Creating such computer-graphics scenes has become a lot easier with the use of artificial intelligence.

However, unlike other generative AI tools such as ChatGPT and DALL·E 2, which deal with text and images, deepfake poses a bigger threat of misuse. Artificially made video and audio can be used for malicious purposes such as blackmail, or to damage someone’s reputation by spreading misinformation and pornography that uses their likeness. According to a 2019 report by the AI research firm Deeptrace, a staggering 96% of the deepfake videos found online were pornographic.

Earlier, creating a deepfake video required high computational power, large datasets and familiarity with advanced machine learning algorithms. With the latest advances in generative AI, people with limited machine learning expertise can use this technology to make fake videos from very few photos. This raises serious ethical, legal and social concerns.

Political Repercussions

Misinformation has been one of the key challenges facing the online world for quite some time now. In the recent past, we witnessed a flood of photoshopped images passed off as evidence of development, especially during elections. In a country like India, where Internet reach is extensive and accountability is minimal, fake news, half-truths, gossip and unfounded rumours spread rapidly and find eager recipients.

For example, a malicious user can create a deepfake video or audio clip of a politician saying something that he or she never said. Such misinformation can damage the politician’s reputation in both the short and the long term, affecting his or her electoral prospects. In fact, such tactics are already being deployed, but we don’t have any specific mechanism in place to counter them.

For instance, a few days ago, a case was registered in Karnataka against Amit Malviya, the national head of the BJP’s IT wing, for allegedly posting a fake video of Congress leader Rahul Gandhi. This created a political furore, and a long legal battle lies ahead.

What is Deepfake and how did it all start?

In 2017, a fake sex video of Gal Gadot (the Wonder Woman star) appeared on the online platform Reddit. The porn video, which purported to show her in an incest scenario, was made using deep-learning algorithms that affixed Gal Gadot’s face onto a porn star’s body. It was posted by a Reddit user with the pseudonym ‘deepfakes’. This is believed to be the first time the public saw what deepfake technology could do, and the technology was named after that Reddit handle.

How can you spot Deepfake?

As machine-learning algorithms get smarter, it becomes increasingly hard to detect a deepfake with the human eye. However, the following signs can help identify a deepfake made by a less skilled creator.

- Unusual skin tones, color tones and lighting

- Differences in audio quality

- Unnatural body shape or movements

- Repeated unnatural eye movements

During the initial days of deepfake in 2018, researchers found that deepfake faces didn’t blink. That was mainly because the models were trained on publicly available images, which mostly showed people with their eyes open. After this was observed, the issue was ‘fixed’ in later versions and deepfake faces started to blink. This only underscores the cat-and-mouse race that we are engaged in. A lot of academic research is happening in this direction.
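To make the blinking cue concrete, here is a minimal Python sketch of a blink-rate check. It is not the method used by the researchers mentioned above. It assumes that some separate, hypothetical face-tracking step has already produced one "eye openness" score per video frame (higher means more open), and the thresholds `closed_below`, `min_frames` and `min_rate` are illustrative guesses rather than validated values.

```python
from typing import Sequence


def count_blinks(openness: Sequence[float],
                 closed_below: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count blinks in a sequence of per-frame eye-openness scores.

    A blink is a run of at least `min_frames` consecutive frames whose
    score is below `closed_below`. Both thresholds are assumptions.
    """
    blinks = 0
    run = 0  # length of the current run of "closed" frames
    for value in openness:
        if value < closed_below:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # the clip ended while the eyes were closed
        blinks += 1
    return blinks


def blinks_per_minute(openness: Sequence[float], fps: float) -> float:
    """Convert the blink count into a rate, given the frame rate."""
    if len(openness) == 0 or fps <= 0:
        raise ValueError("need at least one frame and a positive fps")
    minutes = len(openness) / fps / 60.0
    return count_blinks(openness) / minutes


def looks_suspicious(openness: Sequence[float], fps: float,
                     min_rate: float = 5.0) -> bool:
    """Flag a clip whose blink rate falls below `min_rate` per minute.

    `min_rate` is an illustrative cut-off, not a researched value.
    """
    return blinks_per_minute(openness, fps) < min_rate
```

A clip in which the scores never dip below the threshold would be flagged, while one with regular short dips would not. Since newer deepfakes do blink, a check like this can only ever be one weak signal among several.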

How are Governments dealing with Deepfake?

One can argue that this technology creates more problems than it solves. Revenge porn and politically motivated fake videos will pose a tough challenge to legal systems across the world. Over the last few years, many countries, including the United States, China and the United Kingdom, have started amending their laws to address this new phenomenon.

In the United Kingdom, the process of amending the Online Safety Bill started in 2022 to address issues such as revenge porn, voyeurism and deepfakes, and the U.K. has now criminalised the sharing of intimate deepfake images. China also banned the creation of deepfakes in January 2023 on the basis of guidelines from the Cyberspace Administration of China (CAC), the country’s Internet regulator. The United States is planning to make the sharing of non-consensual AI-generated pornography illegal. A bill moved by Congressman Joe Morelle is currently under discussion. India too might consider moving in a similar direction to address the challenges posed by deepfakes.

References:

https://www.gov.uk/government/news/new-laws-to-better-protect-victims-from-abuse-of-intimate-images

https://regmedia.co.uk/2019/10/08/deepfake_report.pdf

https://www.vice.com/en/article/gydydm/gal-gadot-fake-ai-porn

What a hideously fascinating world unravels through this article. A complex web of deception and misinformation seems to be the future of mankind.

This article illustrates just how effectively AI can be used to bring discord to our society. AI serves as both a boon and a bane in these times.